Solution for simultaneous localization and target mapping optimized for aerial surveillance

Method to accurately estimate the position and metrics (length, width, and height) of any target.

Short Description

A novel method to accurately estimate the position and metrics (length, width, and height) of any target feature appearing in an aerial image. The method can also retrieve the coordinates of the air vehicle at the moment the image is acquired.

Descriptive information, such as position and dimensions, can greatly support the reconnaissance and classification of target features. The method can operate in GPS-denied environments and support navigation by providing the coordinates of the air vehicle.

The main features of the method are:

- Visual-inertial based: no GPS or other GNSS information is needed;

- Extremely accurate (up to centimetric accuracy);

- Moving targets can be considered (people, vehicles, vessels, animals, etc.);

- Very fast since only two reference points are needed for camera resectioning;

- Fully described mathematically (no black box).

It has been extensively tested in real environments for validation and accuracy analysis. The test results have been published in an international scientific journal (IEEE Sensors Journal; see [1]).

Technology Overview

A single optical image acquired by a monocular optical camera is needed. The focal length and orientation of the camera must be known. The position of the camera (e.g., GPS coordinates) is not needed, so the method can work in GPS-denied environments.
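To illustrate why the focal length and orientation are enough to turn image measurements into geometry, the sketch below back-projects an image point into a viewing direction in world coordinates. It is a minimal pinhole-camera sketch under assumed conventions, not the formulation of [1]: image coordinates (u, v) are measured from the principal point in the same units as the focal length f, the camera axes are x right, y down and z along the optical axis, the world frame is East-North-Up, and R is the (assumed known) camera-to-world rotation matrix. The names `back_project` and `R_NADIR` are hypothetical.

```python
import math
from typing import Sequence

Vec3 = tuple[float, float, float]


def back_project(u: float, v: float, f: float, R: Sequence[Sequence[float]]) -> Vec3:
    """Unit world-frame direction of the viewing ray through image point (u, v).

    Assumed conventions (illustrative only):
    - (u, v) measured from the principal point, u to the right, v downward,
      in the same units as the focal length f (e.g. pixels);
    - camera frame: x right, y down, z along the optical axis;
    - R: 3x3 camera-to-world rotation matrix (the known camera orientation).
    """
    d_cam = (u, v, f)
    # Rotate the camera-frame ray into the world frame: d_world = R @ d_cam.
    d_world = [sum(R[i][j] * d_cam[j] for j in range(3)) for i in range(3)]
    norm = math.sqrt(sum(c * c for c in d_world))
    return (d_world[0] / norm, d_world[1] / norm, d_world[2] / norm)


# Hypothetical nadir-looking camera: image x -> East, image y (down) -> South,
# optical axis -> straight down (world -z).
R_NADIR = ((1.0, 0.0, 0.0),
           (0.0, -1.0, 0.0),
           (0.0, 0.0, -1.0))

print(back_project(0.0, 0.0, 1000.0, R_NADIR))  # (0.0, 0.0, -1.0)
```

With this convention the principal point of a nadir camera maps to the straight-down direction; an oblique camera changes only R.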

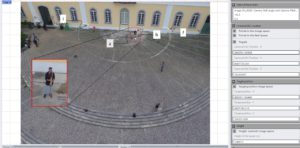

Only two reference points are enough to determine the world coordinates of the air vehicle when the image was acquired. Digital elevation models or 3D city models can be used to define the reference points. Once the position of the air vehicle is known, the world coordinates and dimensions of any target appearing in the image can be retrieved (see Figure 1).
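One way to see why two reference points suffice: once the orientation and focal length are known, each reference point and its image point define a line (the viewing ray through that point) on which the camera centre must lie, so the two lines meet at the camera position. The sketch below illustrates this idea with a closest-approach (midpoint) intersection of the two rays. It is an assumed formulation, not necessarily the resection procedure of [1], and the function names are hypothetical. In practice the ray directions would come from back-projecting the image points; here they are simulated from a known camera position so the result can be checked.

```python
Vec3 = tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add_scaled(a: Vec3, d: Vec3, s: float) -> Vec3:
    return (a[0] + s * d[0], a[1] + s * d[1], a[2] + s * d[2])


def camera_from_two_points(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3) -> Vec3:
    """Estimate the camera centre from two reference points (hypothetical helper).

    p1, p2: world coordinates of the reference points (e.g. from a DEM or 3D city model).
    d1, d2: world-frame directions of the rays camera -> p1 and camera -> p2.
    The camera lies on both lines p_k + s * d_k; with noisy data these lines are
    skew, so the midpoint of their closest approach is returned.
    """
    w0 = _sub(p1, p2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12 * a * c:
        raise ValueError("rays are (nearly) parallel; use better-separated reference points")
    s = (b * e - c * d) / denom  # parameter of the closest point on line 1
    t = (a * e - b * d) / denom  # parameter of the closest point on line 2
    q1 = _add_scaled(p1, d1, s)
    q2 = _add_scaled(p2, d2, t)
    return ((q1[0] + q2[0]) / 2, (q1[1] + q2[1]) / 2, (q1[2] + q2[2]) / 2)


# Synthetic check: simulate rays from a known camera position.
camera_true = (10.0, 20.0, 100.0)
p1, p2 = (0.0, 0.0, 0.0), (30.0, 50.0, 5.0)
d1, d2 = _sub(p1, camera_true), _sub(p2, camera_true)
print(camera_from_two_points(p1, d1, p2, d2))  # (10.0, 20.0, 100.0)
```

With known orientation, each image point contributes two equations while the camera centre has three unknowns, so two points over-determine the position; the midpoint choice simply splits any residual mismatch between the two rays.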

As reported in [1], the method can be extremely accurate, reaching centimetric accuracy. The uncertainty depends mainly on the quality of the elevation data. For example, if the digital elevation model has an uncertainty of 5 cm, as with LiDAR, the error of the method ranges between 0 and ±3 cm. If the uncertainty of the digital elevation model is 2.0 m, the error ranges between ±20 cm and ±60 cm.

Figure 1: Aerial image used to test and validate the method’s prototype. The target feature is a 1.80 m measuring pole standing vertically on the ground (see the enlarged detail in the rectangle). Points i and l were used as reference points to retrieve the coordinates of the camera/UAV. Point a (bottom of the pole, on the ground) was used to retrieve the position of the target, and point b (top of the pole) to retrieve its height. Further details, including aggregated results of the tests conducted, are published in [1].
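The use of points a and b in Figure 1 can be sketched as follows, assuming the camera position is already known and the terrain around the target is locally flat at the elevation read from the DEM. The target position is the intersection of the ray through a with that ground level; the height is taken where the ray through b passes closest to the vertical line through the base. This is an illustrative formulation under those assumptions, not the exact procedure of [1]; the function names and the synthetic numbers (chosen to mimic a 1.80 m pole) are hypothetical.

```python
Vec3 = tuple[float, float, float]


def ground_point(camera: Vec3, ray: Vec3, ground_z: float) -> Vec3:
    """Intersect the ray camera + s * ray (s > 0) with the horizontal plane z = ground_z."""
    if abs(ray[2]) < 1e-12:
        raise ValueError("ray is parallel to the ground plane")
    s = (ground_z - camera[2]) / ray[2]
    if s <= 0:
        raise ValueError("ground plane is not in front of the camera")
    return (camera[0] + s * ray[0], camera[1] + s * ray[1], ground_z)


def vertical_height(camera: Vec3, ray_top: Vec3, base: Vec3) -> float:
    """Height above `base` of the top of a vertical object seen along ray_top.

    The top is taken as the point of the ray horizontally closest to the
    vertical line through the base.
    """
    hx, hy = ray_top[0], ray_top[1]
    hh = hx * hx + hy * hy
    if hh < 1e-12:
        raise ValueError("ray is vertical: height is not observable from this view")
    # Ray parameter minimising the horizontal distance to the base.
    t = ((base[0] - camera[0]) * hx + (base[1] - camera[1]) * hy) / hh
    return camera[2] + t * ray_top[2] - base[2]


# Synthetic example: camera 100 m up, pole base at ground elevation 2.0 m.
camera = (0.0, 0.0, 100.0)
ray_a = (30.0, 40.0, -98.0)   # towards the bottom of the pole (point a)
ray_b = (30.0, 40.0, -96.2)   # towards the top of the pole (point b)
base = ground_point(camera, ray_a, ground_z=2.0)
print(base)                                            # (30.0, 40.0, 2.0)
print(round(vertical_height(camera, ray_b, base), 3))  # 1.8
```

Perturbing `ground_z` in this sketch shows directly how errors in the elevation data propagate into the estimated target position.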

Computer vision algorithms can be used to select reference points and detect targets of interest in the imagery (e.g., people detection), automating the entire process.

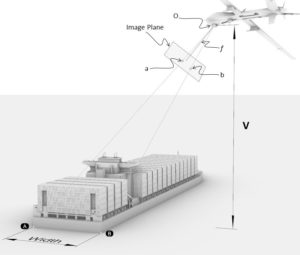

In maritime applications, where reference points are not available, the method can still be used for target mapping. Assuming the sea surface is perfectly flat, the method needs only the altitude of the air vehicle (determined from barometric data) and the camera parameters (focal length and orientation) to estimate the targets’ dimensions (see Figure 2).

Figure 2: In maritime applications the method can estimate targets’ dimensions, such as the width of a vessel (A-B), assuming that the sea surface is perfectly flat. World points A and B are represented by points a and b in the image plane. The elevation of the aerial asset V must be known, along with the camera parameters: the orientation of the camera (O) and the focal length (f).
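For the maritime case of Figure 2, a minimal sketch under the flat-sea assumption: place the camera V at height H above the sea plane z = 0, back-project image points a and b with the known focal length f and orientation, intersect both rays with the sea plane, and measure the distance between the resulting world points A and B. The conventions (principal-point-centred image coordinates, x-right/y-down camera axes, camera-to-world rotation matrix R) and all names are assumptions for illustration, as is treating the barometric altitude as the height above the sea surface.

```python
import math

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]


def sea_point(u: float, v: float, f: float, R: Mat3, altitude: float) -> Vec3:
    """Intersect the viewing ray of image point (u, v) with the flat sea plane z = 0.

    The camera V is at (0, 0, altitude) in a local frame with z pointing up;
    R is the camera-to-world rotation (camera x right, y down, z forward).
    """
    d_cam = (u, v, f)
    dx, dy, dz = (sum(R[i][j] * d_cam[j] for j in range(3)) for i in range(3))
    if dz >= 0:
        raise ValueError("ray does not hit the sea surface (at or above the horizon)")
    s = -altitude / dz  # ray parameter where the height drops from `altitude` to 0
    return (s * dx, s * dy, 0.0)


def sea_distance(a: tuple[float, float], b: tuple[float, float],
                 f: float, R: Mat3, altitude: float) -> float:
    """Distance on the sea surface between the world points imaged at a and b."""
    return math.dist(sea_point(*a, f, R, altitude), sea_point(*b, f, R, altitude))


# Hypothetical nadir camera 100 m above the sea, focal length 1000 px.
R_NADIR = ((1.0, 0.0, 0.0), (0.0, -1.0, 0.0), (0.0, 0.0, -1.0))
width = sea_distance((-100.0, 0.0), (100.0, 0.0), 1000.0, R_NADIR, altitude=100.0)
print(round(width, 6))  # 20.0
```

For a vessel, a and b would be the image points on either side of the hull; with an oblique camera only R changes. Because every ray is intersected with the same plane, an error in the altitude scales all recovered distances proportionally in this sketch.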

Stage of Development

TRL 5 – Process validation in relevant environment.

Opportunity

- Licensing.

- Co-development.

Further Details

[1] A. Tonini, M. Painho, and M. Castelli, “Method for estimating targets’ dimensions using aerial surveillance cameras,” in IEEE Sensors Journal, doi: 10.1109/JSEN.2023.3325725.

Intellectual Property

Priority: 15/07/2021

NOVA Inventors

Andrea Tonini

Marco Painho

Mauro Castelli